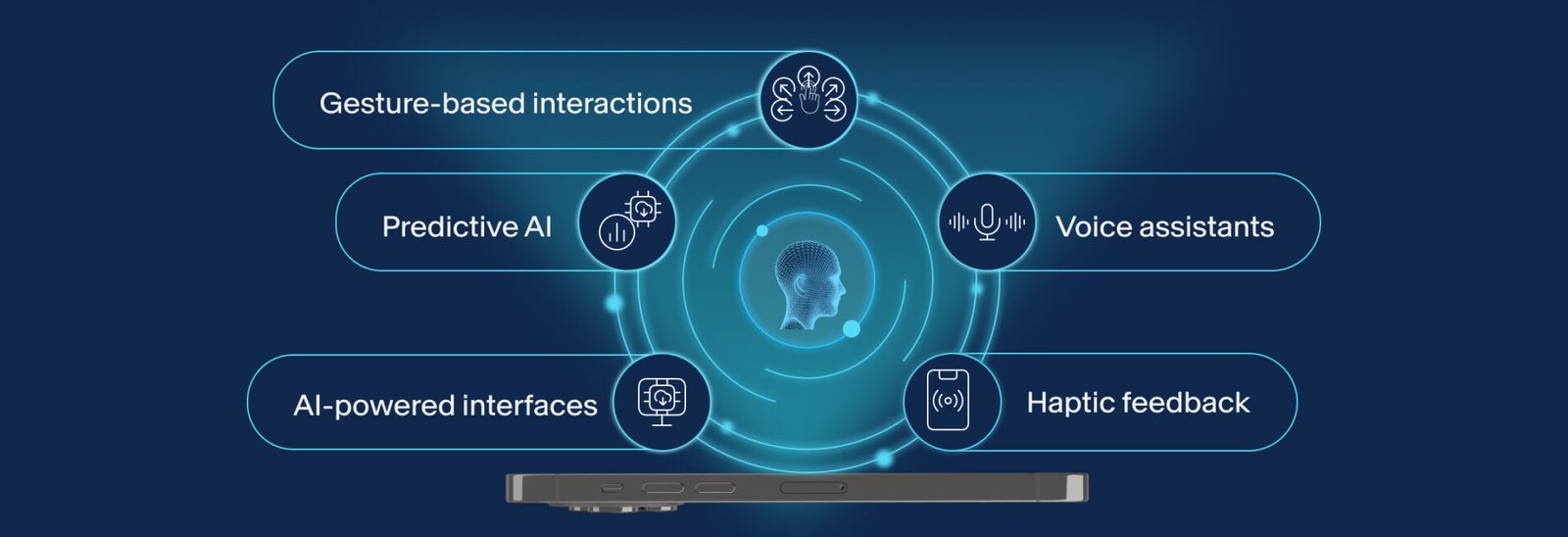

Hands-Free, Future-Ready: How Voice and Gesture-Based Interfaces Are Redefining User Experience

In a world where convenience, speed, and accessibility dominate user expectations, traditional taps and swipes are gradually giving way to more natural, intuitive interactions: voice and gesture-based interfaces.

From commanding smart homes with a whisper to navigating AR environments with a simple hand wave, these interfaces are revolutionizing how we interact with technology. And as devices become more context-aware and AI gets smarter, this trend is only accelerating.

What Are Voice and Gesture-Based Interfaces?

- Voice-based interfaces let users interact with devices using spoken language. Think of Alexa, Siri, or Google Assistant, which are now built into everything from cars to kitchen appliances.

- Gesture-based interfaces recognize physical movements (like hand waves or finger pinches) through sensors or cameras, enabling interaction without touch.

These are often bundled into multi-modal systems that combine voice, vision, and touch to create fluid user experiences.
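To make the multi-modal idea concrete, here is a minimal Python sketch in which a spoken phrase and a hand gesture resolve to the same app action. The event format, phrase list, gesture names, and action labels are all hypothetical. A real system would receive transcripts from a speech recognizer and gesture labels from a vision model rather than plain strings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InputEvent:
    """Hypothetical input event from one modality."""
    modality: str  # "voice" or "gesture" (assumed labels)
    payload: str   # transcript text or gesture name


# Hypothetical vocabularies: each modality maps onto the same shared actions.
VOICE_PHRASES = {
    "next track": "NEXT",
    "previous track": "PREVIOUS",
    "pause": "PAUSE",
}
GESTURES = {
    "swipe_left": "NEXT",
    "swipe_right": "PREVIOUS",
    "open_palm": "PAUSE",
}


def route(event: InputEvent) -> Optional[str]:
    """Map a voice or gesture event to a shared action name, or None if unrecognized."""
    if event.modality == "voice":
        text = event.payload.lower().strip()
        # Substring match so "Play the next track" still maps to NEXT.
        for phrase, action in VOICE_PHRASES.items():
            if phrase in text:
                return action
        return None
    if event.modality == "gesture":
        return GESTURES.get(event.payload)
    # Modalities this sketch does not handle (e.g. touch) are ignored.
    return None
```

Here `route(InputEvent("voice", "Play the next track"))` and `route(InputEvent("gesture", "swipe_left"))` both return `"NEXT"`, so the rest of the app only has to handle one action vocabulary, regardless of how the user expressed the command.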

Why They Matter in 2025

1. Touchless Tech Is Now a User Expectation

In the post-pandemic world, touchless interfaces have moved from novelty to necessity. Hospitals, retail stores, and public transport systems are adopting gesture-based kiosks and voice-activated systems to reduce physical contact.

2. Natural User Interface (NUI)

Both voice and gestures mimic how we naturally communicate, which makes them well suited to accessibility and inclusive design. Users don't have to learn an on-screen UI first; they can simply speak or move.

3. Boosted by AI & Machine Learning

Modern voice interfaces understand context, emotion, and accents better than ever before, while gesture recognition has improved thanks to computer vision and deep learning.
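In practice, a computer-vision model usually does the hard part (finding the hand in each camera frame), and a thin layer of logic turns that hand track into a command. This Python sketch covers only that last layer. It assumes a hypothetical upstream hand tracker that reports one normalized horizontal position per frame, and the threshold defaults are illustrative, not values from any particular product.

```python
from typing import Optional, Sequence


def classify_swipe(
    xs: Sequence[float],
    min_distance: float = 0.3,
    min_consistency: float = 0.8,
) -> Optional[str]:
    """Classify a horizontal swipe from per-frame hand x-positions.

    xs: normalized x-coordinates (0.0 = left edge, 1.0 = right edge), assumed
        to come from an upstream hand-tracking model, one value per frame.
    min_distance: minimum net horizontal travel to count as a swipe.
    min_consistency: fraction of frame-to-frame steps that must move in the
        swipe's direction, which rejects jittery back-and-forth motion.
    """
    if len(xs) < 2:
        return None
    net = xs[-1] - xs[0]
    if abs(net) < min_distance:
        return None  # hand did not travel far enough
    steps = [b - a for a, b in zip(xs, xs[1:])]
    direction = 1 if net > 0 else -1
    # Count steps that move the same way as the overall displacement.
    agreeing = sum(1 for s in steps if s * direction > 0)
    if agreeing / len(steps) < min_consistency:
        return None  # motion too erratic to be a deliberate swipe
    return "swipe_right" if direction > 0 else "swipe_left"
```

For example, `classify_swipe([0.2, 0.3, 0.45, 0.6, 0.75])` returns `"swipe_right"`, while a jittery track such as `[0.2, 0.5, 0.3, 0.6, 0.55, 0.7]` covers a similar distance but fails the consistency check and returns `None`. The thresholds would need tuning against real tracking data.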

Where These Interfaces Are Making an Impact

| Sector | Use Case Example |
|---|---|
| Smart Homes | Voice-activated lighting, music, and climate control |
| Automotive | Gesture-based infotainment control, voice navigation |
| Healthcare | Surgeons using gesture-controlled displays during procedures |
| Gaming & AR/VR | Full-body gesture input, voice-controlled commands |
| Retail & Kiosks | Contactless gesture navigation in public info displays |

Challenges to Overcome

- Noise Sensitivity & Accents – Voice recognition still struggles in noisy environments or with less common dialects.

- Hardware Requirements – Robust gesture interfaces often rely on dedicated sensors (e.g., LiDAR or depth cameras), which increase costs.

- Privacy Concerns – Always-listening devices raise security and data protection issues.
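The noise problem is easy to see in a basic energy-based voice activity detector, which flags audio frames as speech when their energy rises clearly above the background. This Python sketch assumes audio has already been split into frames of float samples in [-1.0, 1.0], and it assumes the first few frames contain only background noise. The `ratio` default is a hypothetical value, not a standard.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_speech(
    frames: Sequence[Sequence[float]],
    noise_frames: int = 5,
    ratio: float = 3.0,
) -> List[bool]:
    """Flag frames whose energy is well above an estimated noise floor.

    Assumes the first `noise_frames` frames contain only background noise,
    a common simplification in basic energy-based voice activity detection.
    """
    if noise_frames < 1:
        raise ValueError("noise_frames must be at least 1")
    if not frames:
        return []
    baseline = frames[:noise_frames]
    noise_floor = sum(frame_rms(f) for f in baseline) / len(baseline)
    threshold = noise_floor * ratio
    # A frame counts as speech only if it clearly exceeds the background.
    return [frame_rms(f) > threshold for f in frames]
```

In a quiet room (noise amplitude 0.01), frames of amplitude 0.2 are flagged as speech. If the background rises to amplitude 0.1, the threshold climbs to about 0.3 and the same speech frames are missed. This is a simplified version of the failure mode that real systems try to counter with more sophisticated, often learned, detectors.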

The Road Ahead

As AI models continue to improve and devices become more sensor-rich, voice and gesture interfaces will feel less like features and more like standard modes of interaction. The goal? Seamless, frictionless experiences where technology adapts to you, not the other way around.

We’re moving into an era where the best UI may not be visible at all—and that’s a future worth paying attention to.

Final Thoughts

Voice and gesture-based interfaces are not just flashy gimmicks—they’re paving the way for a more inclusive, efficient, and immersive digital experience. As this tech matures, expect to see it woven into everyday life—from how we cook dinner to how we collaborate at work.
